15 Jun 2016

Google Compute Engine lets users run virtual machines (VMs) in Google’s data centers and on its fiber network. Compute Engine’s tools enable scaling from single instances to global, load-balanced cloud computing.

gcloud-java is the idiomatic Java client for Google Cloud Platform services. gcloud-java aims to provide Java developers with a smooth experience when interacting with Google Cloud services. For information on how to add gcloud-java to your project and start using it, see the project’s README.

Recently, gcloud-java added alpha support for Google Compute Engine.

In this post we will go through some of the main features that the client now supports.

Create a Persistent Disk and a Snapshot

Google Compute Engine’s VMs support persistent storage through disks. You can create persistent disks and attach them to your instances as needed. Compute Engine offers many public images that can be used to create boot disks for your VMs with Linux and Windows operating systems (a complete list can be found here).

Using gcloud-java you can easily create a persistent disk from an existing image, as in the following example:

Compute compute = ComputeOptions.defaultInstance().service();
ImageId imageId = ImageId.of("debian-cloud", "debian-8-jessie-v20160329");
DiskId diskId = DiskId.of("us-central1-a", "test-disk");
ImageDiskConfiguration diskConfiguration = ImageDiskConfiguration.of(imageId);
DiskInfo disk = DiskInfo.of(diskId, diskConfiguration);
Operation operation = compute.create(disk);
// Wait for operation to complete
operation = operation.waitFor();
if (operation.errors() == null) {
  System.out.println("Disk " + diskId + " was successfully created");
} else {
  // inspect operation.errors()
  throw new RuntimeException("Disk creation failed");
}
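Each create call returns an Operation, and waitFor() blocks until Compute Engine reports it as done. Conceptually, that comes down to polling the operation's status at an interval. The sketch below is a generic helper written only to illustrate the idea. The name waitUntil and its timeout handling are assumptions, not gcloud-java's actual implementation.

```java
import java.time.Duration;
import java.util.concurrent.TimeoutException;
import java.util.function.BooleanSupplier;

class Polling {
    // Hypothetical helper (not part of gcloud-java): re-checks isDone every `interval`
    // until it returns true, or throws TimeoutException once `timeout` has elapsed.
    static void waitUntil(BooleanSupplier isDone, Duration interval, Duration timeout)
            throws InterruptedException, TimeoutException {
        long deadline = System.nanoTime() + timeout.toNanos();
        while (!isDone.getAsBoolean()) {
            if (System.nanoTime() - deadline >= 0) {
                throw new TimeoutException("operation not done after " + timeout);
            }
            Thread.sleep(interval.toMillis());
        }
    }
}
```

For example, `Polling.waitUntil(() -> jobFinished(), Duration.ofSeconds(1), Duration.ofMinutes(5))` would poll a hypothetical jobFinished() status check once per second for up to five minutes. With gcloud-java you simply call waitFor().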

Once a disk has been created, gcloud-java can also be used to create disk snapshots. Snapshots can be used for periodic backups of your disks and can be created even while the disk is attached to a running VM. Snapshots are differential saves of your disk state, which means that they can be created faster and at a lower cost than disk images.
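The following toy model makes "differential" concrete. It is not Compute Engine's actual storage format. It assumes a disk is a fixed set of numbered blocks that are never deleted. Each snapshot stores only the blocks that changed since the previous one, and restoring a snapshot layers the stored deltas in order. The class and method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Toy model of differential snapshots (hypothetical, not Compute Engine's format).
// Assumes a disk is a fixed set of numbered blocks that are never deleted.
class DifferentialSnapshots {
    private final List<Map<Integer, String>> deltas = new ArrayList<>();
    private final Map<Integer, String> previous = new HashMap<>();

    // Stores only the blocks whose content differs from the previous snapshot;
    // returns how many blocks this snapshot had to save.
    int snapshot(Map<Integer, String> disk) {
        Map<Integer, String> delta = new HashMap<>();
        for (Map.Entry<Integer, String> block : disk.entrySet()) {
            if (!block.getValue().equals(previous.get(block.getKey()))) {
                delta.put(block.getKey(), block.getValue());
            }
        }
        deltas.add(delta);
        previous.clear();
        previous.putAll(disk);
        return delta.size();
    }

    // Rebuilds the disk as of snapshot `index` by applying deltas 0..index in order.
    Map<Integer, String> restore(int index) {
        Map<Integer, String> disk = new HashMap<>();
        for (int i = 0; i <= index; i++) {
            disk.putAll(deltas.get(i));
        }
        return disk;
    }
}
```

In this model, the first snapshot stores every block. A second snapshot of a mostly unchanged disk stores only the modified blocks, which is why it is quicker and cheaper to take.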

To create a snapshot of an existing disk with gcloud-java, use the following code:

DiskId diskId = DiskId.of("us-central1-a", "test-disk");
Disk disk = compute.getDisk(diskId, Compute.DiskOption.fields());
if (disk != null) {
  String snapshotName = "test-disk-snapshot";
  Operation operation = disk.createSnapshot(snapshotName);
  // Wait for operation to complete
  operation = operation.waitFor();
  if (operation.errors() == null) {
    // use snapshot
    Snapshot snapshot = compute.getSnapshot(snapshotName);
  }
}

Create an External IP Address

External addresses can be assigned to a VM to allow communication with the world outside Compute Engine. A specific instance can be reached via its external IP address, as long as an existing firewall rule allows it. External addresses can be either ephemeral or static. Ephemeral addresses change every time the instance is restarted or terminated. Static addresses, instead, are tied to a project until you explicitly release them. gcloud-java makes creating a static external address as simple as typing the following code:

RegionAddressId addressId = RegionAddressId.of("us-central1", "test-address");
Operation operation = compute.create(AddressInfo.of(addressId));
// Wait for operation to complete
operation = operation.waitFor();
if (operation.errors() == null) {
  System.out.println("Address " + addressId + " was successfully created");
} else {
  // inspect operation.errors()
  throw new RuntimeException("Address creation failed");
}

Create a Compute Engine VM

Once a disk and a static external address have been created, we have everything we need to create and start a fully operational Compute Engine VM. The following gcloud-java code creates and starts a VM instance using the just-created disk and address:

Address externalIp = compute.getAddress(addressId);
InstanceId instanceId = InstanceId.of("us-central1-a", "test-instance");
NetworkId networkId = NetworkId.of("default");
PersistentDiskConfiguration attachConfiguration =
    PersistentDiskConfiguration.builder(diskId).boot(true).build();
AttachedDisk attachedDisk = AttachedDisk.of("dev0", attachConfiguration);
NetworkInterface networkInterface = NetworkInterface.builder(networkId)
    .accessConfigurations(AccessConfig.of(externalIp.address()))
    .build();
MachineTypeId machineTypeId = MachineTypeId.of("us-central1-a", "n1-standard-1");
InstanceInfo instance =
    InstanceInfo.of(instanceId, machineTypeId, attachedDisk, networkInterface);
operation = compute.create(instance);
// Wait for operation to complete
operation = operation.waitFor();
if (operation.errors() == null) {
  System.out.println("Instance " + instanceId + " was successfully created");
} else {
  // inspect operation.errors()
  throw new RuntimeException("Instance creation failed");
}
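The static address above was reserved in region us-central1, while the instance lives in zone us-central1-a. This works because a regional static external address can only be assigned to an instance in the same region. The hypothetical helper below is not part of gcloud-java. It makes that check explicit by deriving a zone's region from its name, relying on Compute Engine's naming convention of a region name followed by a "-letter" suffix.

```java
class ZoneRegion {
    // Compute Engine zone names are the region name plus "-" and a zone letter,
    // e.g. zone "us-central1-a" belongs to region "us-central1".
    static String regionOf(String zone) {
        int dash = zone.lastIndexOf('-');
        if (dash <= 0 || dash == zone.length() - 1) {
            throw new IllegalArgumentException("not a zone name: " + zone);
        }
        return zone.substring(0, dash);
    }

    // A regional static address can only be used by instances in the same region.
    static boolean canAttach(String addressRegion, String instanceZone) {
        return regionOf(instanceZone).equals(addressRegion);
    }
}
```

Running such a check before creating the instance surfaces a region mismatch early, instead of as an error on the create operation.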

You can also create a VM instance using an ephemeral IP address. With the following code, an ephemeral address is automatically assigned to the instance:

InstanceId instanceId = InstanceId.of("us-central1-a", "test-instance");
NetworkId networkId = NetworkId.of("default");
PersistentDiskConfiguration attachConfiguration =
    PersistentDiskConfiguration.builder(diskId).boot(true).build();
AttachedDisk attachedDisk = AttachedDisk.of("dev0", attachConfiguration);
// No address is specified: an ephemeral external IP is assigned automatically
NetworkInterface networkInterface = NetworkInterface.builder(networkId)
    .accessConfigurations(NetworkInterface.AccessConfig.builder()
        .name("external-nat")
        .build())
    .build();
MachineTypeId machineTypeId = MachineTypeId.of("us-central1-a", "n1-standard-1");
InstanceInfo instance =
    InstanceInfo.of(instanceId, machineTypeId, attachedDisk, networkInterface);
operation = compute.create(instance);
// Wait for operation to complete
operation = operation.waitFor();
if (operation.errors() == null) {
  System.out.println("Instance " + instanceId + " was successfully created");
} else {
  // inspect operation.errors()
  throw new RuntimeException("Instance creation failed");
}

If you haven’t created a disk yet, you can also create a VM instance and its boot disk on the fly. With the following code, the instance’s boot disk is created along with the instance itself:

Address externalIp = compute.getAddress(addressId);
ImageId imageId = ImageId.of("debian-cloud", "debian-8-jessie-v20160329");
NetworkId networkId = NetworkId.of("default");
AttachedDisk attachedDisk =
    AttachedDisk.of(CreateDiskConfiguration.builder(imageId)
        .autoDelete(true)
        .build());
NetworkInterface networkInterface = NetworkInterface.builder(networkId)
    .accessConfigurations(AccessConfig.of(externalIp.address()))
    .build();
InstanceId instanceId = InstanceId.of("us-central1-a", "test-instance");
MachineTypeId machineTypeId = MachineTypeId.of("us-central1-a", "n1-standard-1");
InstanceInfo instance =
    InstanceInfo.of(instanceId, machineTypeId, attachedDisk, networkInterface);
Operation operation = compute.create(instance);
// Wait for operation to complete
operation = operation.waitFor();
if (operation.errors() == null) {
  System.out.println("Instance " + instanceId + " was successfully created");
} else {
  // inspect operation.errors()
  throw new RuntimeException("Instance creation failed");
}

Do More

gcloud-java supports other features as well: managing disk images, networks, subnetworks and more. Have a look at Compute’s examples for a more complete set of examples. Javadoc for the latest version is also available here.

26 Apr 2016

This post will show you how to get, install and use a free SSL certificate using Let’s Encrypt.

Let’s Encrypt is a free, automated, and open certificate authority (CA).

Let’s Encrypt not only provides free SSL certificates but also makes the previously tedious process of obtaining and renewing them much easier.

1. Installing Let’s Encrypt

In this first step, we will install the Let’s Encrypt tools on the machine where the SSL certificate is going to be used.

First, clone the Let’s Encrypt repository:

git clone https://github.com/letsencrypt/letsencrypt
cd letsencrypt

Then run ./letsencrypt-auto --help. This will install some dependencies and configure the letsencrypt environment. Towards the end of the command’s output you will see lines like the following:

Requesting root privileges to run letsencrypt...
   sudo /home/<user>/.local/share/letsencrypt/bin/letsencrypt --help

From now on, you can run letsencrypt using:

sudo /home/<user>/.local/share/letsencrypt/bin/letsencrypt

2. Set Up Your Domain and Web Server

To verify that you own the domain for which you are requesting an SSL certificate, the letsencrypt tool generates a challenge file and then tries to access it through your domain name.

The letsencrypt command to generate a certificate looks something like:

letsencrypt certonly --webroot -w /var/www/example -d example.com

Where example.com is the domain for which to generate the SSL certificate.

The tool will place the challenge file in the /var/www/example/.well-known/acme-challenge/ directory.

It will then look for the challenge at http://example.com/.well-known/acme-challenge/<challenge>.

For the certificate generation to succeed, the challenge must be found. Therefore you must:

- Have your DNS configured so that example.com points to your machine
- Have your web server set up to serve files in /var/www/example/.well-known/acme-challenge/

You might already have a web server configured. Consider, for instance, the case where Nginx is running on your machine as a reverse proxy, not serving any static content. Even in this case you can make letsencrypt work by ensuring that http://example.com/.well-known/acme-challenge/ properly serves the files in /var/www/example/.well-known/acme-challenge/. To do this, add the following to the proper server configuration in Nginx’s sites-enabled directory:

server {
  ...
  location /.well-known/acme-challenge/ {
    autoindex on;
    # root + request URI = /var/www/example/.well-known/acme-challenge/<challenge>
    root /var/www/example;
  }
  ...
}
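nginx resolves a request against root by appending the full request URI to it. alias, by contrast, replaces the part of the URI matched by location. The hypothetical Java helpers below model these two rules in simplified form (the only normalization is dropping a trailing slash from root). They make it easy to check which file a challenge request such as /.well-known/acme-challenge/abc resolves to. The token abc is made up.

```java
// Simplified model of how nginx maps a request URI to a file path (illustration only).
class NginxPathModel {
    // root: path = root + full request URI (a trailing slash on root is dropped to avoid "//")
    static String withRoot(String root, String uri) {
        String base = root.endsWith("/") ? root.substring(0, root.length() - 1) : root;
        return base + uri;
    }

    // alias: path = alias + the part of the URI after the matched location prefix
    static String withAlias(String location, String alias, String uri) {
        if (!uri.startsWith(location)) {
            throw new IllegalArgumentException(uri + " does not match location " + location);
        }
        return alias + uri.substring(location.length());
    }
}
```

Pointing root at the challenge directory itself would make nginx look for .well-known/acme-challenge twice in the path. Pointing it at the webroot, or using alias with the challenge directory, resolves to the file letsencrypt actually wrote.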

3. Generate your SSL Certificate

Now that your DNS and web server are configured, everything is set to generate the SSL certificate:

sudo letsencrypt certonly --webroot -w /var/www/example -d example.com

If run correctly this command will output the location of the generated certificates and key:

IMPORTANT NOTES:
 - Congratulations! Your certificate and chain have been saved at
   /etc/letsencrypt/live/example.com/fullchain.pem. Your cert will
   expire on 2016-08-01. To obtain a new version of the certificate in
   the future, simply run Let's Encrypt again.
 - If you like Let's Encrypt, please consider supporting our work by:

   Donating to ISRG / Let's Encrypt: https://letsencrypt.org/donate
   Donating to EFF: https://eff.org/donate-le

In the /etc/letsencrypt/live/example.com/ directory you will find the following files:

cert.pem chain.pem fullchain.pem privkey.pem
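Let’s Encrypt certificates are short-lived (90 days), so it is worth keeping an eye on the expiry date reported above. Here is a minimal Java sketch that reads that date from cert.pem with the JDK's CertificateFactory and decides whether it is time to renew. The class name and the 30-day renewal window used in the usage example are assumptions; pick whatever margin suits you.

```java
import java.io.IOException;
import java.io.InputStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.security.cert.CertificateException;
import java.security.cert.CertificateFactory;
import java.security.cert.X509Certificate;
import java.time.Duration;
import java.time.Instant;

class CertExpiry {
    // Reads the first certificate in a PEM file such as cert.pem and returns its expiry instant.
    static Instant notAfter(Path pemFile) throws IOException, CertificateException {
        try (InputStream in = Files.newInputStream(pemFile)) {
            X509Certificate cert = (X509Certificate)
                    CertificateFactory.getInstance("X.509").generateCertificate(in);
            return cert.getNotAfter().toInstant();
        }
    }

    // True when less than `renewBefore` is left between `now` and the expiry instant.
    static boolean shouldRenew(Instant notAfter, Instant now, Duration renewBefore) {
        return Duration.between(now, notAfter).compareTo(renewBefore) < 0;
    }
}
```

For example, `CertExpiry.shouldRenew(CertExpiry.notAfter(Path.of("/etc/letsencrypt/live/example.com/cert.pem")), Instant.now(), Duration.ofDays(30))` tells you whether to run letsencrypt again. Run it as a user allowed to read that directory.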

Notice that Let’s Encrypt does not support wildcard certificates (for the moment). However, you can generate a certificate for more than one subdomain, as follows:

sudo letsencrypt certonly --webroot -w /var/www/example -d example.com -d www.example.com -d blog.example.com

For this command to succeed, the challenge must be reachable through all the specified subdomains (e.g. http://example.com/.well-known/acme-challenge/, http://www.example.com/.well-known/acme-challenge/ and http://blog.example.com/.well-known/acme-challenge/ must all point to /var/www/example/.well-known/acme-challenge/).
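Before running letsencrypt for several domains, it can help to check that every challenge URL actually reaches the webroot. Here is a minimal sketch. It assumes you have placed a test file named ping.txt (a hypothetical name) in /var/www/example/.well-known/acme-challenge/. It builds one URL per domain and checks for an HTTP 200 answer using the JDK's HttpURLConnection.

```java
import java.io.IOException;
import java.net.HttpURLConnection;
import java.net.URI;
import java.util.ArrayList;
import java.util.List;

class ChallengePreflight {
    // One URL per -d domain; each must resolve to the same file under the webroot.
    static List<String> challengeUrls(List<String> domains, String fileName) {
        List<String> urls = new ArrayList<>();
        for (String domain : domains) {
            urls.add("http://" + domain + "/.well-known/acme-challenge/" + fileName);
        }
        return urls;
    }

    // True if the URL answers with HTTP 200; connection errors propagate as IOException.
    static boolean reachable(String url) throws IOException {
        HttpURLConnection conn = (HttpURLConnection) URI.create(url).toURL().openConnection();
        conn.setConnectTimeout(5000);
        conn.setReadTimeout(5000);
        try {
            return conn.getResponseCode() == HttpURLConnection.HTTP_OK;
        } finally {
            conn.disconnect();
        }
    }
}
```

Loop over `challengeUrls(List.of("example.com", "www.example.com", "blog.example.com"), "ping.txt")`, call reachable() on each URL, and fix any domain that fails before requesting the certificate. Remove the test file afterwards.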

4. Use Your SSL Certificate with Nginx

The generated certificates and key can be found in /etc/letsencrypt/live/example.com/:

cert.pem chain.pem fullchain.pem privkey.pem

To use the generated SSL certificate, open your site’s configuration (in Nginx’s sites-enabled directory) and add the following:

server {
  ...
  listen 443 ssl;
  ssl_certificate /etc/letsencrypt/live/example.com/fullchain.pem;
  ssl_certificate_key /etc/letsencrypt/live/example.com/privkey.pem;
  ssl_protocols TLSv1 TLSv1.1 TLSv1.2;
  ssl_ciphers HIGH:!aNULL:!MD5;
  ...
}

Now you can go visit https://example.com and enjoy your free SSL certificate.

01 Nov 2015

This post is for a friend of mine, so that he can stop bugging me.

I love git and I love GitHub. Contributing to projects on GitHub is cool but not always easy if you don’t know where to start. This post presents a neat workflow for handling your GitHub forks and pull requests.

Assume we forked the cool-people/cool-project repository and that we cloned it on our machine.

Adding the Upstream Remote

First, let’s add a remote for the original repository and call it upstream (you can pick your preferred name here). It will come in handy later.

git remote add upstream <address of cool-people/cool-project>

Creating a New Local Branch

As we are git people, we don’t develop our new feature/fix/whatever in the master branch, right? Let’s create a branch for our new feature and switch to it (you can replace feature with any name you like, as long as it is meaningful):

git branch feature
git checkout feature

Or directly:

git checkout -b feature

And here we go, writing amazing code.

Handling Conflicts with Upstream’s Master

Once we are done coding and we committed our changes we would like to send our feature back to

cool-people/cool-project’s master branch. But what if cool-people/cool-project has gone ahead?

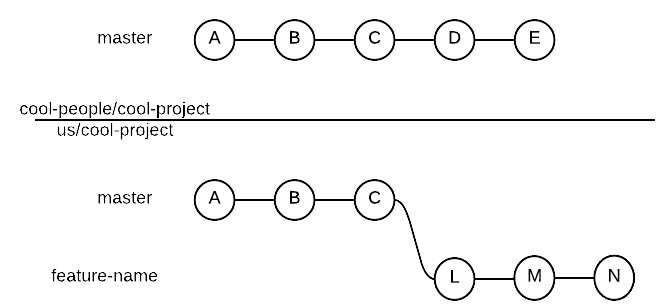

We might be in the following situation:

And even worse, imagine commits D and E are in conflict with our L, M and N commits.

That is, our feature now conflicts

with cool-people/cool-project’s master branch and we need to fix this before sending our pull request.

Let’s first update our master branch:

git checkout master
git fetch upstream
git merge upstream/master

We can update our remote master branch as well:

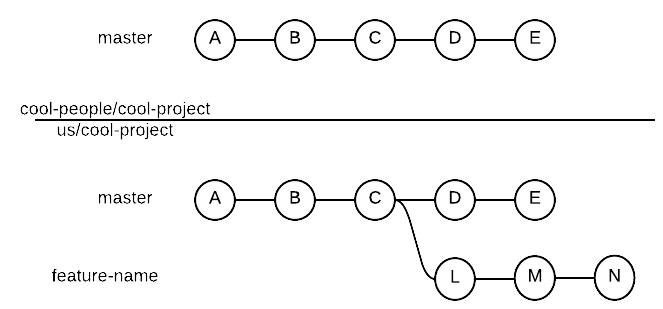

Now that our master branch is up-to-date with cool-people/cool-project’s master we are in the following state:

But we haven’t solved our conflict problem yet.

To import master changes into our feature branch and resolve conflicts, we use git rebase:

git checkout feature
git rebase master

If there are conflicts bewteen our commits and master’s, the above command will tell us.

If that is the case it’s our duty to resolve conflits and git add the files we modified.

Once we resolved all conflicts we can continue rebasing with:

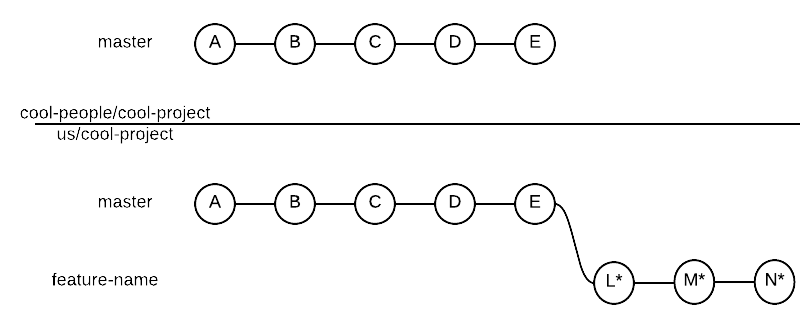

Rebase does the following:

- Imports all missing commits from

master into the feature branch

- Creates a new commit (L*, M* and N*) for each commit previously in the

feature branch

- Moves new commits (L*, M* and N*) after the last commit imported from

master

To better understand this, here’s our history state after rebasing:
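The three steps can be mimicked on plain lists of commit names. In this toy model, A, B and C are hypothetical commits shared by both branches, and D and E are the new upstream commits. A replayed commit is marked with * because rebase creates a new commit rather than moving the old one. Real git also rewrites each commit's parent and re-applies its diff, which the model ignores.

```java
import java.util.ArrayList;
import java.util.List;

class RebaseModel {
    // Starts from the updated master history, then replays each feature-only commit in order.
    // The replayed commits are new commits, so L becomes L*, M becomes M*, and so on.
    static List<String> rebase(List<String> updatedMaster, List<String> featureOnly) {
        List<String> history = new ArrayList<>(updatedMaster);
        for (String commit : featureOnly) {
            history.add(commit + "*");
        }
        return history;
    }
}
```

`RebaseModel.rebase(List.of("A", "B", "C", "D", "E"), List.of("L", "M", "N"))` returns [A, B, C, D, E, L*, M*, N*]: a single straight line with our work on top.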

Push our Changes

To update our remote feature branch after rebasing, we can use the usual git push. If we have never pushed the branch, we can simply do:

git push origin feature

If we have already pushed it, we must force the push, because rebase created non-fast-forward changes (and by default git prevents us from pushing non-fast-forward changes):

git push origin feature -f
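A push is a fast-forward when the commit currently at the tip of the remote branch is an ancestor of the commit being pushed. After a rebase this no longer holds: the old tip N is not an ancestor of N*. The sketch below checks ancestry on a small in-memory commit graph. It uses a hypothetical representation mapping each commit to its parents, whereas real git reads this from its object database. The names follow the scenario above, with A, B and C as hypothetical commits preceding D and E on master.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

class FastForwardCheck {
    // True if remoteTip is reachable from localTip by following parent links,
    // i.e. pushing localTip would only add commits on top of what the remote has.
    static boolean isFastForward(Map<String, List<String>> parents, String remoteTip, String localTip) {
        Deque<String> toVisit = new ArrayDeque<>();
        Set<String> seen = new HashSet<>();
        toVisit.push(localTip);
        while (!toVisit.isEmpty()) {
            String commit = toVisit.pop();
            if (commit.equals(remoteTip)) {
                return true;
            }
            if (seen.add(commit)) {
                for (String parent : parents.getOrDefault(commit, List.of())) {
                    toVisit.push(parent);
                }
            }
        }
        return false;
    }
}
```

With the old feature branch (C, L, M, N) already on the remote, pushing N* is not a fast-forward, which is exactly why the -f flag is needed.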

We can now go to GitHub and press the “Compare & pull request” button for the feature branch. This will allow us to finalize our pull request.

Why Rebase?

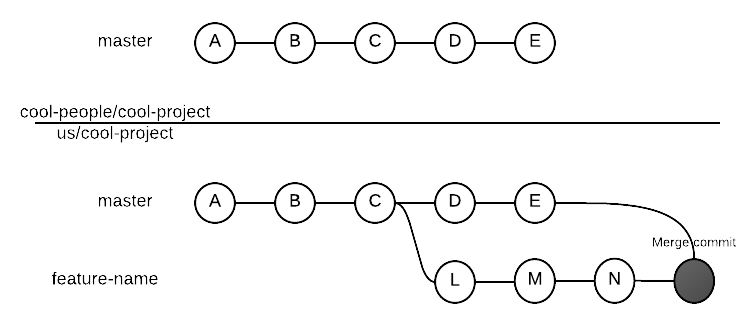

You might now be asking: why did we use git rebase rather than git merge?

The answer to this questions can be found in what merge does to the history, when compared with rebase.

Here’s what would have happened to our history if we used merge instead of rebase:

By sending a pull request from our feature branch, we are asking cool-people/cool-project to add our commits to their history. Do you think cool-people would ever be interested in adding to their history a merge commit between your branch and their own stuff? I don’t think so.

Compared with merge, rebase helps you send pull requests with a clean history, avoiding commits that add no meaningful information to it.

Why Not Rebase?

WITH GREAT POWER THERE MUST ALSO COME – GREAT RESPONSIBILITY!

Rebase is a powerful tool and helps you keep dirt out of the history. However, as we’ve seen, rebase creates non-fast-forward changes. Pushing such changes is never a good idea if your branch is used by other people. Rebased commits are new commits, so users working on the old ones will have a very unpleasant surprise if you rebase their remote branch!

You can safely use rebase when your feature branch is local (i.e. you haven’t pushed it yet), or when you are sure you are the only person working on that branch (which is often the case for pull requests).

25 Oct 2015

React.js is a JavaScript library for building user interfaces.

React’s popularity is growing, and with it the number and quality of available tools. Several applications on GitHub can be used as a starting point for your own project. However, few examples show how to set up a production environment for one of these boilerplates.

In this post I’ll show you how to configure a Google Compute Engine VM to run the React.js stack. I will use the React Redux Universal Hot Example, as it seemed to me one of the most complete. It involves an API server written in Express and a React.js application. See here for a complete list of technologies.

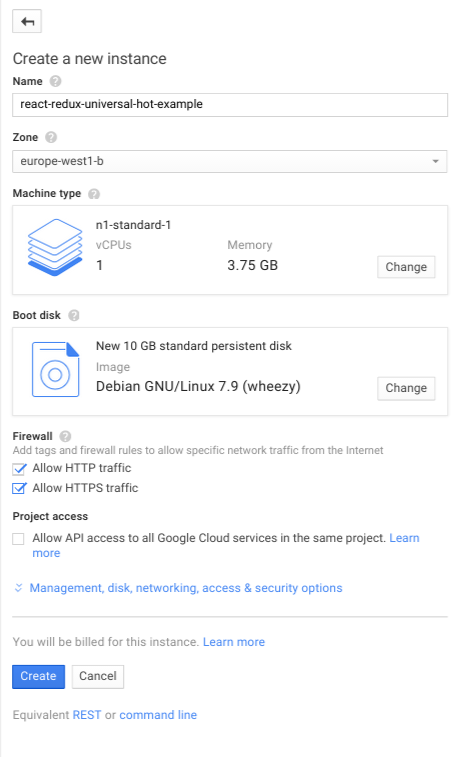

Creating a Compute Engine VM

If you haven’t already, create your Compute Engine project. From the web console create a

Debian GNU/Linux 7.9 “wheezy” VM of the preferred size in the preferred zone. Remember to

allow HTTP and HTTPS traffic by ticking the corresponding options:

Installing git and Node.js

First of all, let’s install git. This is as simple as typing:

sudo apt-get install git

To install Node.js you have several options. You can either download the Linux binaries from here and put them into your path, or compile the sources.

Let’s try the hard way and compile Node.js from source:

sudo apt-get install g++ make
wget https://nodejs.org/dist/v0.12.7/node-v0.12.7.tar.gz
tar -xvf node-v0.12.7.tar.gz
cd node-v0.12.7
./configure
sudo make install

Notice that trying to compile any version more recent than 0.12.7 will fail, as it requires a more up-to-date version of g++ than the one available in the official Debian “wheezy” repos. If you need a more recent version, I recommend downloading the pre-compiled binaries.

Clone and Build the Example

With Node.js installed we can finally clone and build our example:

cd ~
git clone https://github.com/erikras/react-redux-universal-hot-example.git
cd react-redux-universal-hot-example
npm install
npm run build

Now we could start the example with a simple PORT=8080 npm run start, but this would give us very little control over the started processes. What happens if Node.js fails? Where do the logs go? Luckily for us, forever comes to the rescue.

Managing Services with forever

forever lets you run Node.js applications continuously and start, restart and stop them (and much, much more). I recommend consulting forever --help to discover for yourself what forever can do for you. First, install it by running:

sudo npm install -g forever

We can then use it to start our API server on port 3030.

NODE_PATH=./src NODE_ENV=production APIPORT=3030 forever start ./bin/api.js

As well as our React.js application (on port 8080):

PORT=8080 NODE_PATH=./src NODE_ENV=production APIPORT=3030 forever start ./bin/server.js

You can get the list of running services with forever list.

Now our React.js application is up and running on port 8080. You won’t be able to see it from outside your VM, as port 8080 is closed (unless you opened it).

Running Node.js on port 80 is, in general, not a good idea: binding to a port below 1024 requires root privileges, so Node.js itself would have to run as root. To avoid this we use nginx.

Configuring nginx

We configure nginx as a reverse proxy server: nginx will listen for connections on port 80 and forward the traffic to our React.js app, running on port 8080.
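To build intuition for what proxy_pass does, here is a deliberately tiny reverse proxy in Java. It is built on the JDK's com.sun.net.httpserver.HttpServer and java.net.http.HttpClient (Java 11+). It is a sketch, not a substitute for nginx: it treats every request as a GET, drops request and response headers, and buffers whole responses. Like proxy_pass without a URI part, it passes the request URI to the backend unchanged. The class and method names are hypothetical.

```java
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.io.OutputStream;
import java.net.InetSocketAddress;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

class TinyReverseProxy {
    // Listens on listenPort and forwards each request, as a GET, to backendBase
    // (e.g. "http://127.0.0.1:8080"), then relays status code and body to the client.
    static HttpServer start(int listenPort, String backendBase) throws IOException {
        HttpClient client = HttpClient.newBuilder().version(HttpClient.Version.HTTP_1_1).build();
        HttpServer server = HttpServer.create(new InetSocketAddress(listenPort), 0);
        server.createContext("/", exchange -> {
            // Like proxy_pass without a URI part: the request URI is forwarded unchanged.
            URI target = URI.create(backendBase + exchange.getRequestURI());
            int status;
            byte[] body;
            try {
                HttpResponse<byte[]> upstream = client.send(
                        HttpRequest.newBuilder(target).GET().build(),
                        HttpResponse.BodyHandlers.ofByteArray());
                status = upstream.statusCode();
                body = upstream.body();
            } catch (IOException | InterruptedException e) {
                if (e instanceof InterruptedException) {
                    Thread.currentThread().interrupt();
                }
                status = 502; // Bad Gateway: the backend could not be reached
                body = new byte[0];
            }
            exchange.sendResponseHeaders(status, body.length == 0 ? -1 : body.length);
            if (body.length > 0) {
                try (OutputStream out = exchange.getResponseBody()) {
                    out.write(body);
                }
            }
            exchange.close();
        });
        server.start();
        return server;
    }
}
```

`TinyReverseProxy.start(80, "http://127.0.0.1:8080")` would mirror the nginx setup. Binding port 80 would still need root privileges, which is the very reason to put a hardened server like nginx in front.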

First, install nginx with:

sudo apt-get install nginx

Then create a configuration file for our React.js application:

sudo touch /etc/nginx/sites-available/react

Now edit that file and put the following configuration in it:

server {
  listen 80;
  server_name react;

  location / {
    proxy_pass http://127.0.0.1:8080;
  }
}

Before starting nginx, we need to enable the configuration by putting it in the sites-enabled directory (using a symlink):

sudo ln -s /etc/nginx/sites-available/react /etc/nginx/sites-enabled/react

And finally we start nginx (or restart it, if it is already running):

sudo service nginx restart

Your React application is now running smoothly in production. Visit it at http://<your VM address>.

Now you can go on developing some cool stuff!

27 Sep 2015

Node-TimSort is a JavaScript implementation of the TimSort algorithm developed by Tim Peters, which proved to be incredibly fast on top of Node.js v0.12.7 (article here). Node-TimSort is available on GitHub, npm and bower.

Given the (not so) recent update of Node.js to version 4, I decided to benchmark the module against Node’s latest release (v4.1.1). The results are shown in the following table:

| Array Type | Length | TimSort.sort (ns) | array.sort (ns) | Speedup |
|---|---|---|---|---|
| Random | 10 | 1529 | 4804 | 3.14 |
| | 100 | 16091 | 56875 | 3.53 |
| | 1000 | 199985 | 704214 | 3.52 |
| | 10000 | 2528060 | 9125651 | 3.61 |
| Descending | 10 | 1092 | 3680 | 3.37 |
| | 100 | 2503 | 31799 | 12.70 |
| | 1000 | 11821 | 543912 | 46.01 |
| | 10000 | 98039 | 7768847 | 79.24 |
| Ascending | 10 | 1030 | 2062 | 2.00 |
| | 100 | 2195 | 30635 | 13.95 |
| | 1000 | 8715 | 502126 | 57.61 |
| | 10000 | 72685 | 7581941 | 104.31 |
| Ascending + 3 Rand Exc | 10 | 1489 | 2503 | 1.68 |
| | 100 | 4064 | 31230 | 7.68 |
| | 1000 | 13647 | 515358 | 37.76 |
| | 10000 | 106676 | 7549566 | 70.77 |
| Ascending + 10 Rand End | 10 | 1543 | 3162 | 2.05 |
| | 100 | 6596 | 34657 | 5.25 |
| | 1000 | 22121 | 501595 | 22.67 |
| | 10000 | 127955 | 7240459 | 56.59 |
| Equal Elements | 10 | 1064 | 2172 | 2.04 |
| | 100 | 2089 | 6645 | 3.18 |
| | 1000 | 7640 | 42421 | 5.55 |
| | 10000 | 59737 | 392580 | 6.57 |
| Many Repetitions | 10 | 1501 | 3183 | 2.12 |
| | 100 | 18723 | 37164 | 1.98 |
| | 1000 | 252043 | 559894 | 2.22 |
| | 10000 | 3224430 | 7672401 | 2.38 |
| Some Repetitions | 10 | 1578 | 3260 | 2.07 |
| | 100 | 18486 | 36732 | 1.99 |
| | 1000 | 248599 | 544063 | 2.19 |
| | 10000 | 3272074 | 7590367 | 2.32 |
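The Speedup column is the ratio between the two execution times, i.e. how many times faster TimSort.sort ran than array.sort. For example, for random arrays of length 10:

$$
\text{Speedup} = \frac{t_{\text{array.sort}}}{t_{\text{TimSort.sort}}} = \frac{4804\ \text{ns}}{1529\ \text{ns}} \approx 3.14
$$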

Even with version 4.1.1 TimSort.sort is faster than array.sort on any of the tested array types.

In general, the more ordered the array is, the better TimSort.sort performs with respect to array.sort (up to 100 times faster on already sorted arrays).
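Much of this behaviour comes from how TimSort scans its input for natural runs, which it then merges. A natural run is a maximal non-descending sequence, or a strictly descending one that TimSort reverses in place. Already ordered inputs, ascending or descending, form a single run, so almost no merging is needed. The Java helper below only counts runs the way TimSort detects them. It leaves out the minimum run length, binary insertion sort and galloping merges of the full algorithm. Java's own Arrays.sort for object arrays is also a TimSort.

```java
class RunCounter {
    // Counts maximal natural runs as TimSort detects them:
    // non-descending runs, or strictly descending runs.
    static int countRuns(int[] a) {
        int runs = 0;
        int i = 0;
        while (i < a.length) {
            int j = i + 1;
            if (j < a.length && a[j] < a[i]) {
                // strictly descending run (TimSort would reverse it in place)
                while (j < a.length && a[j] < a[j - 1]) {
                    j++;
                }
            } else {
                // non-descending run (equal neighbours stay in the same run)
                while (j < a.length && a[j] >= a[j - 1]) {
                    j++;
                }
            }
            runs++;
            i = j;
        }
        return runs;
    }
}
```

Sorted, reverse-sorted and all-equal arrays each yield exactly one run. A random array breaks into many short runs that must be merged, which matches the much smaller speedups in the Random and Repetitions rows.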

As always, the results also depend on the machine on which the benchmark is run. I strongly encourage you to clone the repository and run the benchmark on your own setup with: